[ Note: Click here for the Docker Monitoring webinar video recording and slides. And click here for the Docker Logging webinar video recording and slides. ]

---

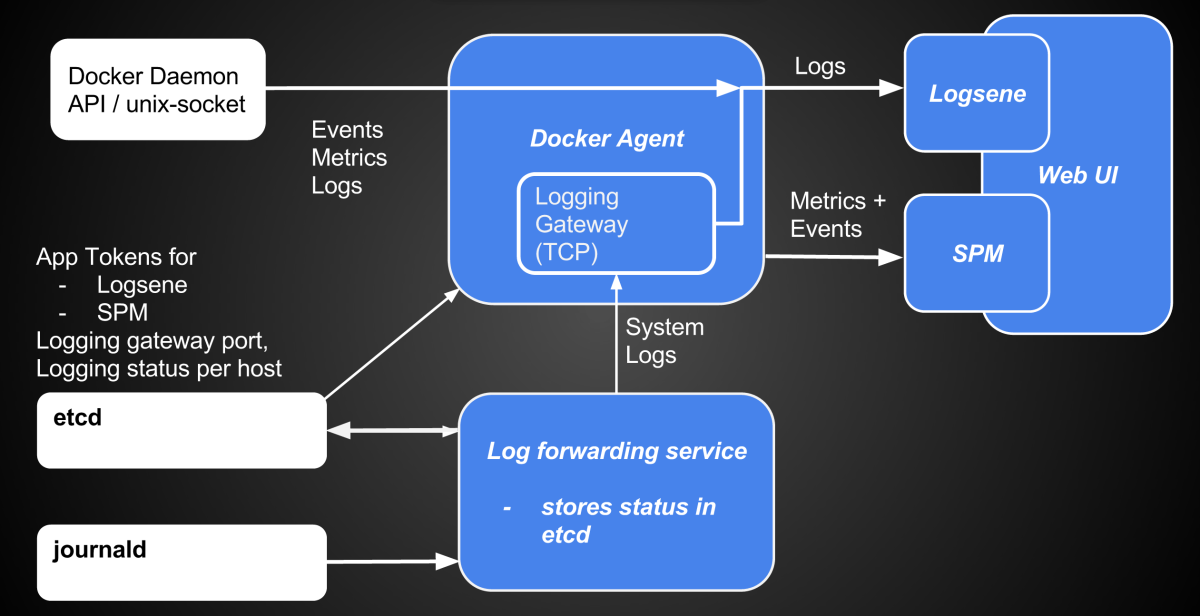

Containers and Docker are all the rage these days. In fact, containers, with Docker as the leading container implementation, have changed how we deploy systems, especially those composed of microservices. Despite all the buzz, however, Docker and other containers are still relatively new and not yet mainstream. That being said, even early Docker adopters need a good monitoring tool, so last month we added Docker monitoring to SPM. We built it on top of spm-agent, the extensible framework for Node.js-based agents, and ended up with sematext-agent-docker.

Monitoring Docker environments is challenging. Why? Because each container typically runs a single process, has its own environment, uses virtual networks, and may manage storage in its own way. Traditional monitoring solutions take metrics from each server and the applications it runs. These servers and the applications running on them are typically very static, with very long uptimes. Docker deployments are different: a set of containers may run many applications, all sharing the resources of a single host. It’s not uncommon for Docker servers to run thousands of short-lived containers (e.g., for batch jobs) while a set of permanent services runs in parallel. Traditional monitoring tools were not designed for such dynamic environments and are poorly suited to these deployments. SPM, on the other hand, was built with this in mind.

Moreover, container resource sharing calls for stricter enforcement of resource usage limits, an additional issue you must watch carefully. To adjust resource quotas appropriately, you need good visibility into any limits containers have reached and any errors they have caused. We recommend setting alerts based on the defined limits; this way you can adjust limits or resource usage before errors start happening.
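To make the idea of alerting on defined limits concrete, here is a minimal sketch in Python. It is not how SPM implements alerts. It assumes each container's stats are available as a dictionary shaped like the Docker stats API output (with `memory_stats.usage` and `memory_stats.limit`); the function name `memory_alerts` and the 80% threshold are hypothetical choices for illustration.

```python
def memory_alerts(containers, warn_ratio=0.8):
    """Return (name, ratio) pairs for containers whose memory usage is at or
    above warn_ratio of their limit, highest ratio first.

    `containers` maps a container name to a stats dictionary. The field names
    follow the Docker stats API; the threshold is an illustrative assumption.
    """
    alerts = []
    for name, stats in containers.items():
        memory = stats.get("memory_stats", {})
        usage = memory.get("usage")
        limit = memory.get("limit")
        if not usage or not limit:
            # Skip containers that report no usage or no limit.
            continue
        ratio = usage / limit
        if ratio >= warn_ratio:
            alerts.append((name, round(ratio, 2)))
    return sorted(alerts, key=lambda item: item[1], reverse=True)
```

Warning at a fraction of the limit, rather than at the limit itself, leaves time to raise the quota or reduce usage before allocations start failing.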

How do we get detailed metrics for each container?

Docker provides a remote interface for container stats (by default exposed via UNIX domain socket). The SPM agent for Docker uses this interface to collect Docker metrics.
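As a rough illustration of that interface, the sketch below uses only the Python standard library to send an HTTP request over the UNIX domain socket and to derive a CPU percentage from one stats sample. It is not the agent's code (the agent is Node.js-based). The helper names `UnixSocketHTTPConnection`, `docker_get`, and `cpu_percent` are hypothetical, the socket path is the default location, and the CPU formula follows the calculation described in the Docker Engine API documentation.

```python
import http.client
import json
import socket


class UnixSocketHTTPConnection(http.client.HTTPConnection):
    """HTTPConnection that talks to a UNIX domain socket instead of TCP."""

    def __init__(self, socket_path, timeout=10):
        super().__init__("localhost", timeout=timeout)
        self.socket_path = socket_path

    def connect(self):
        sock = socket.socket(socket.AF_UNIX, socket.SOCK_STREAM)
        sock.settimeout(self.timeout)
        sock.connect(self.socket_path)
        self.sock = sock


def docker_get(path, socket_path="/var/run/docker.sock"):
    """GET a Docker Engine API path over the socket and decode the JSON body."""
    conn = UnixSocketHTTPConnection(socket_path)
    try:
        conn.request("GET", path)
        response = conn.getresponse()
        body = response.read()
        if response.status != 200:
            raise RuntimeError(f"Docker API returned {response.status}: {body!r}")
        return json.loads(body)
    finally:
        conn.close()


def cpu_percent(stats):
    """Compute CPU usage in percent from a single stats sample.

    Uses the delta between `cpu_stats` and `precpu_stats`, scaled by the
    number of CPUs available to the container.
    """
    cpu = stats["cpu_stats"]
    pre = stats["precpu_stats"]
    cpu_delta = cpu["cpu_usage"]["total_usage"] - pre["cpu_usage"]["total_usage"]
    system_delta = cpu.get("system_cpu_usage", 0) - pre.get("system_cpu_usage", 0)
    if cpu_delta <= 0 or system_delta <= 0:
        return 0.0
    cpus = cpu.get("online_cpus") or len(cpu["cpu_usage"].get("percpu_usage") or []) or 1
    return cpu_delta / system_delta * cpus * 100.0
```

On a host with a running Docker daemon, `docker_get("/containers/json")` lists the running containers, and `docker_get("/containers/<id>/stats?stream=false")` returns one stats sample for a container ID taken from that list, which can then be passed to `cpu_percent`.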

SPM Docker Agent monitoring other containers, itself running in a Docker container

How to deploy monitoring for Docker

There are several ways one can run a Docker monitor, including:

1. run it directly on the host machine (“Server” in the figure above)

2. run one agent for multiple servers

3. run the agent in a container (alongside the containers it monitors) on each server

SPM uses approach 3), aka the “Docker Way”. Thus, SPM for Docker is provided as a Docker image. This makes installation easy, requires no dependencies to be installed on the host machine (unlike approach 1), and requires no server list to be configured to support multiple Docker servers.

How to install SPM for Docker

It’s very simple: Create an SPM App of type “Docker” to get the SPM application token (for $TOKEN, see below), and then run:

    docker pull sematext/sematext-agent-docker

and

    docker run -d -v /var/run/docker.sock:/var/run/docker.sock -e SPM_TOKEN=$TOKEN sematext/sematext-agent-docker

You’ll see your Docker metrics in SPM after about a minute.

SPM for Docker – Features

If you already know SPM then you’re aware that each SPM integration supports all SPM features. If, however, you are new to SPM, this summary will help:

- Out-of-the-box Dashboards and unlimited custom Dashboards

- Multi-user support with role-based access control, application and account sharing

- Threshold-based Alerts on all metrics, including Custom Metrics

- Machine learning-based Anomaly Detection on all metrics, including Custom Metrics

- Alerting via email, PagerDuty, Nagios and Webhooks (e.g. Slack, HipChat)

- Email subscriptions for scheduled Performance Reports

- Secure sharing of graphs and reports with your team, or with the public

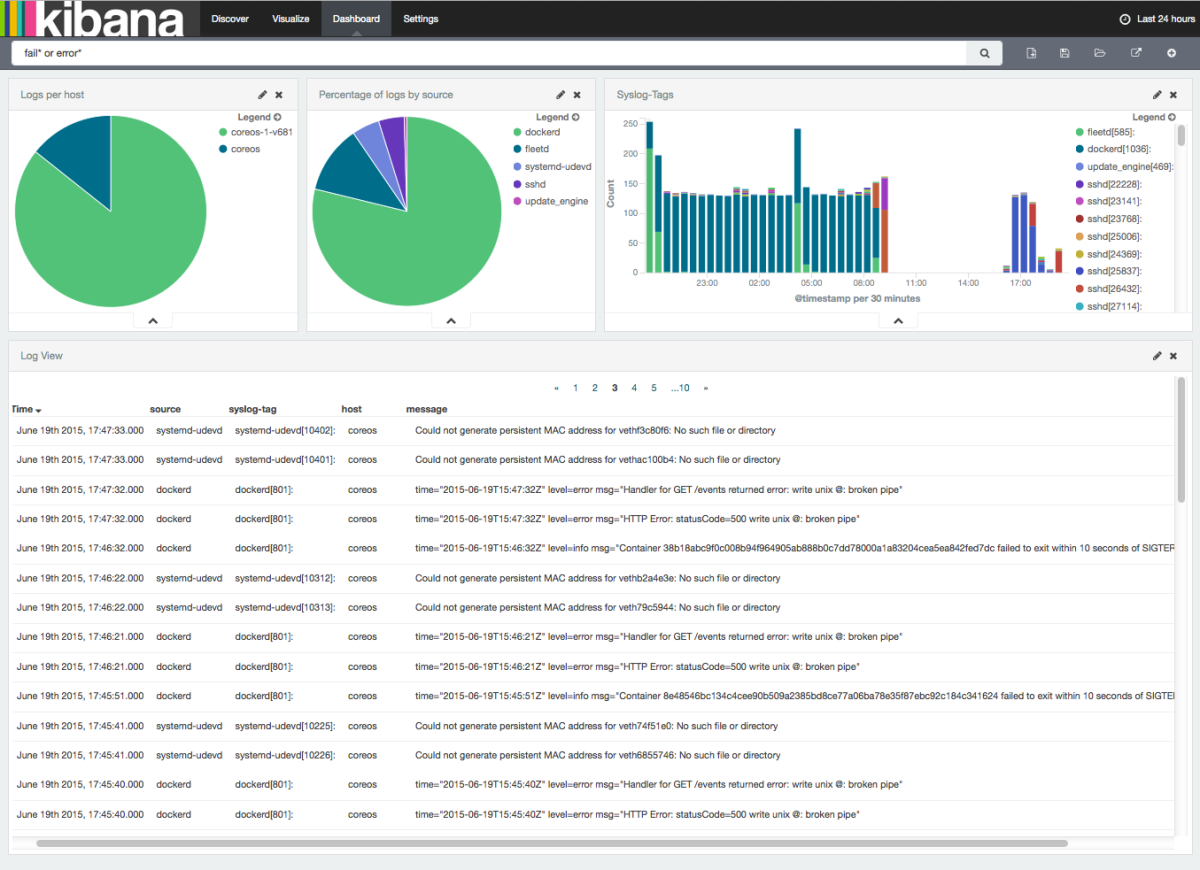

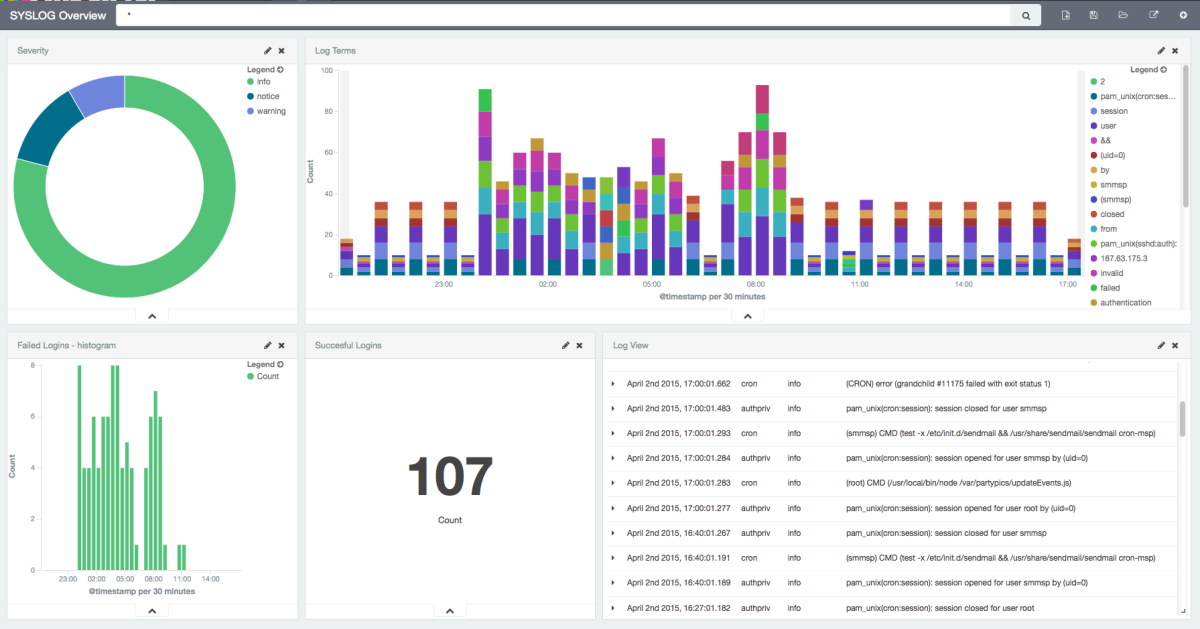

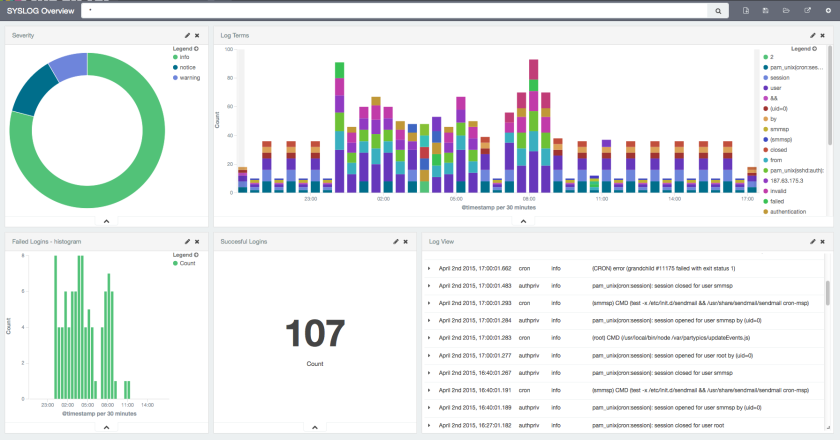

- Correlation with logs shipped to Logsene

- Charting and correlation with arbitrary Events

Let’s continue with the Docker-specific part:

- Easy-to-install Docker agent

- Monitoring of multiple Docker Hosts and unlimited number of Containers per ‘SPM Docker App’

- Predefined Dashboards for all Host and Container metrics

- OS Metrics of the Docker Host

- Detailed Container Metrics

  - CPU

  - Memory

  - Network

  - I/O Metrics

- Limits of Resource Usage

  - CPU throttled times

  - Memory limits

  - Fail counters (e.g., for memory allocation and network packets)

- Filtering and aggregation by Hosts, Images, Container IDs, and Tags
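To illustrate where limit-related metrics such as throttled CPU time and fail counters can come from, the following Python sketch pulls them out of a stats dictionary shaped like the Docker stats API output and sums them per image. It is an illustration under stated assumptions, not SPM's implementation: `limit_counters` and `aggregate_by_image` are hypothetical helpers, the image name is assumed to be supplied by the caller (for example from the container listing), and `memory_stats.failcnt` is only reported on some hosts, so missing fields default to zero.

```python
from collections import defaultdict


def limit_counters(stats):
    """Extract limit-related values from one Docker stats sample."""
    throttling = stats.get("cpu_stats", {}).get("throttling_data", {})
    memory = stats.get("memory_stats", {})
    networks = stats.get("networks") or {}

    def net_total(field):
        # Sum one counter across all network interfaces of the container.
        return sum(iface.get(field, 0) for iface in networks.values())

    return {
        "cpu_throttled_periods": throttling.get("throttled_periods", 0),
        "cpu_throttled_time_ns": throttling.get("throttled_time", 0),
        "memory_limit_bytes": memory.get("limit", 0),
        "memory_failcnt": memory.get("failcnt", 0),
        "net_rx_dropped": net_total("rx_dropped"),
        "net_tx_dropped": net_total("tx_dropped"),
        "net_rx_errors": net_total("rx_errors"),
        "net_tx_errors": net_total("tx_errors"),
    }


def aggregate_by_image(samples):
    """Sum the counters per image.

    `samples` is an iterable of (image_name, stats) pairs. The memory limit
    is a configured value rather than a counter, so it is left out of the sums.
    """
    totals = defaultdict(lambda: defaultdict(int))
    for image, stats in samples:
        for key, value in limit_counters(stats).items():
            if key == "memory_limit_bytes":
                continue
            totals[image][key] += value
    return {image: dict(counters) for image, counters in totals.items()}
```

Grouping by image rather than container ID keeps the numbers meaningful when many short-lived containers are started from the same image.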

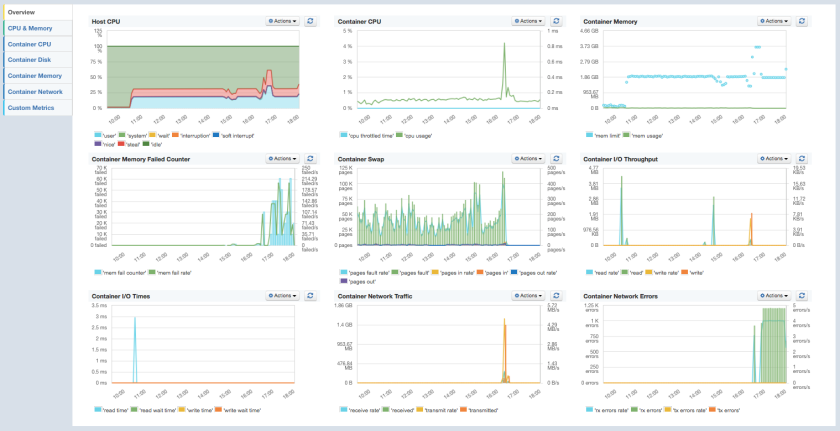

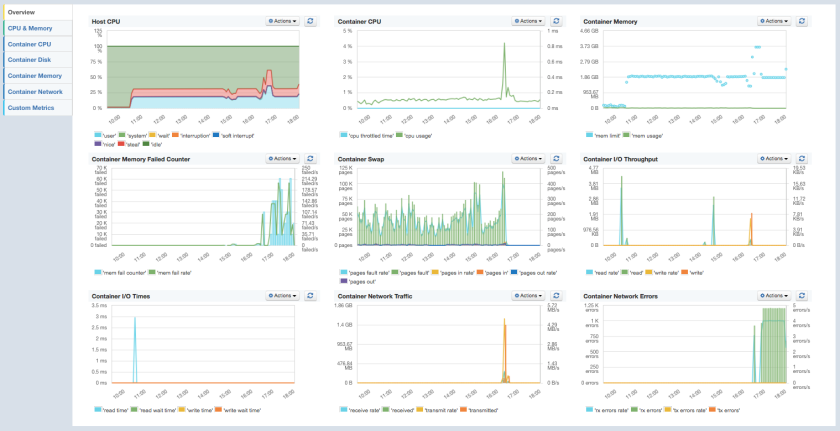

SPM for Docker – Predefined Dashboard ‘Overview’

Containerized applications typically communicate with other applications via exposed network ports. That is why network metrics are high on the list of metrics to watch for Docker, and why SPM provides such detailed network Reports.

Did you enjoy this little excursion on Docker monitoring? Then it’s time to practice it!

We appreciate feedback from early adopters, so please feel free to drop us a line, DM us on Twitter @sematext, or chat with us using the web chat in SPM or on our homepage. We are here to get your monitoring up and running. If you are a startup, get in touch: we offer discounts for startups!