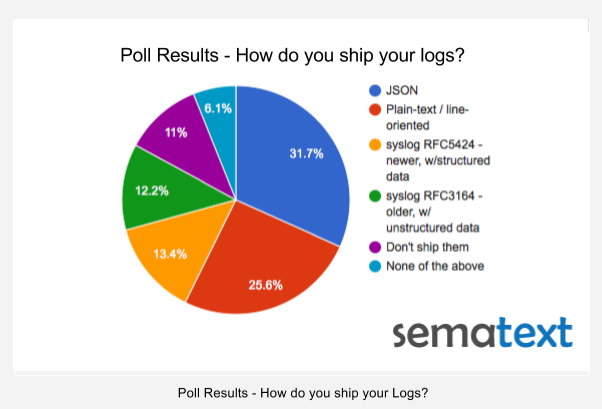

The results for the log shipping formats poll are in. Thanks to everyone who took the time to vote!

The distribution pie chart is below, but we can summarize it for you here:

- JSON won pretty handily with 31.7% of the votes, which was not entirely unexpected. If anything, we expected to see even more people shipping logs in JSON. One person pointed out GELF, but GELF is really just a specific JSON structure sent over Syslog/HTTP, so it falls into the JSON bucket, too.

- Plain-text / line-oriented log shipping is still popular, clocking in at 25.6% of the votes. It will be interesting to see how that changes over the next year or two. Any guesses? If you use Logstash to ship line-oriented logs but occasionally have to deal with multi-line log events, such as exception stack traces, we've blogged about how to ship multi-line logs with Logstash.

- Syslog RFC5424 (the newer one, with structured data in it) barely edged out its older brother, RFC3164 (unstructured data). Did this surprise anyone? Maybe people don't care about structured logs as much as one might think? Still, structure is important, as we'll show later today in our Docker Logging webinar: without it, you're mostly limited to "supergrepping" your logs instead of getting insight from more analytical queries. That said, the two syslog formats together add up to 25%! Talk about ancient specs holding their ground against newcomers!

- There are still some people out there who aren't shipping logs! That's a bit scary! 🙂 Fortunately, there are a lot of options available today, from expensive on-premises Splunk or a DIY ELK Stack to the awesome Logsene, which is sort of like the ELK Stack on steroids. Look at the log shipping info to see just how easy it is to get your logs off your local disks, so you can stop grepping them. If you can't live without the console, you can always use logsene-cli!

Similarly, if your organization falls into the "Don't ship them" camp (and maybe even "None of the above", depending on what you are or are not doing) and you haven't done so already, you should consider trying a centralized logging service, whether it runs within your organization or is a logging SaaS like Logsene, or at least a DIY ELK Stack.
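To make the "supergrepping" point from the syslog discussion a bit more concrete, here is a minimal Python sketch. It assumes logs are shipped as one JSON object per line, and the sample events and field names (`service`, `level`, `latency_ms`) are hypothetical, chosen only for illustration. With structured events you can group and aggregate by field; a plain substring search can only tell you which raw lines match.

```python
import json
from collections import defaultdict

# Hypothetical sample events, one JSON object per line.
# The field names are illustrative, not from any particular shipper.
JSON_LINES = [
    '{"service": "api", "level": "error", "latency_ms": 120}',
    '{"service": "api", "level": "info", "latency_ms": 80}',
    '{"service": "web", "level": "info", "latency_ms": 40}',
    '{"service": "web", "level": "error", "latency_ms": 300}',
    '{"service": "web", "level": "info", "latency_ms": 20}',
]


def avg_latency_by_service(lines):
    """Analytical query: group structured events by one field, average another."""
    totals = defaultdict(int)
    counts = defaultdict(int)
    for line in lines:
        event = json.loads(line)  # structure makes each field addressable
        totals[event["service"]] += event["latency_ms"]
        counts[event["service"]] += 1
    return {svc: totals[svc] / counts[svc] for svc in totals}


def grep(lines, needle):
    """The 'supergrep' approach: plain substring match, no notion of fields."""
    return [line for line in lines if needle in line]


if __name__ == "__main__":
    print(avg_latency_by_service(JSON_LINES))
    print(grep(JSON_LINES, "error"))
```

Here `avg_latency_by_service(JSON_LINES)` returns `{'api': 100.0, 'web': 120.0}`, while `grep(JSON_LINES, "error")` just returns the two matching raw lines. A search backend such as Elasticsearch can run this kind of aggregation at scale; the sketch only shows why the fields need to exist in the first place.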