This is the second part of a 3-part post series in which we describe how we use HBase at Sematext for real-time analytics with an append-only updates approach.

In our previous post we explained the problem in detail with the help of an example and touched on the idea behind the suggested solution. In this post we go through the solution in detail and briefly introduce the open-source implementation of the described approach.

Suggested Solution

The suggested solution can be described as follows:

- replace update (Get+Put) operations at write time with simple append-only writes

- defer processing updates to periodic compaction jobs (not to be confused with minor/major HBase compaction)

- process updates on the fly only if the user asks for data before the updates have been compacted

Before (standard Get+Put updates approach):

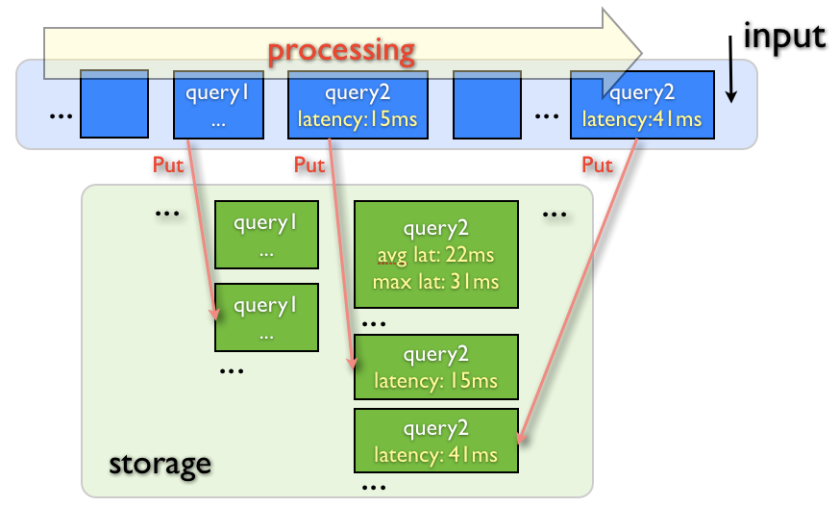

The picture above shows an example of updating search query metrics that can be collected by a Search Analytics system (something we do at Sematext).

- each new piece of data (blue box) is processed individually

- to apply an update based on the new piece of data:

  - existing data (green box) is first read

  - data is changed and

  - written back

After (append-only updates approach):

1. Writing updates:

2. Periodic updates processing (compacting):

3. Performing on-the-fly updates processing (only if the user asks for data before the updates have been compacted):

Note: the result of processing updates on the fly can optionally be stored back right away, so that the next time the same data is requested no compaction is needed.
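The three steps can be sketched with a small in-memory stand-in for a table sorted by row key. This is only an illustration under assumptions: the class `AppendOnlyTable`, its methods, and the `record_id#sequence` row key layout are hypothetical names, and nothing here is the HBase client API or the HBaseHUT implementation.

```python
from bisect import insort


class AppendOnlyTable:
    """In-memory stand-in for a table sorted by row key (not the HBase API)."""

    def __init__(self):
        self.rows = []  # sorted list of (row_key, value)
        self.seq = 0    # makes every appended row key unique

    def append_update(self, record_id, value):
        # Step 1: a plain write of a new row, no read of existing data.
        self.seq += 1
        insort(self.rows, (f"{record_id}#{self.seq:010d}", value))

    def scan(self, record_id):
        # Range scan over all rows that belong to one logical record.
        prefix = record_id + "#"
        return [(k, v) for k, v in self.rows if k.startswith(prefix)]

    def compact(self, record_id, merge):
        # Step 2: merge all pending rows into one row and write it back.
        pending = self.scan(record_id)
        if len(pending) < 2:
            return
        merged = merge([v for _, v in pending])
        self.rows = [r for r in self.rows if r not in pending]
        insort(self.rows, (pending[-1][0], merged))

    def read(self, record_id, merge, store_back=False):
        # Step 3: merge on the fly whatever has not been compacted yet.
        values = [v for _, v in self.scan(record_id)]
        if not values:
            return None
        if store_back:
            # Optionally persist the merged result right away.
            self.compact(record_id, merge)
        return merge(values)


table = AppendOnlyTable()
for hits in (3, 1, 5):
    table.append_update("query:hbase", hits)

total_before = table.read("query:hbase", sum)  # merged on the fly from 3 rows
table.compact("query:hbase", sum)              # periodic compaction
total_after = table.read("query:hbase", sum)   # read from 1 compacted row
```

The reader gets the same total before and after compaction; compaction only changes how many rows have to be merged to produce it.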

The idea is simple and not a new one, but given the specific qualities of HBase like fast range scans and high write throughput it works especially well with HBase. So, what we gain here is:

- high update throughput

- real-time updates visibility: despite deferring the actual updates processing, user always sees the latest data changes

- efficient updates processing by replacing random Get+Put operations with processing sets of records at a time (during the fast scan) and eliminating redundant Get+Put attempts when writing the very first data item

- better handling of update load peaks

- ability to roll back any range of updates

- avoiding data inconsistency problems caused by tasks that fail after only partially updating data in HBase without rolling back (when used with MapReduce, for example)

High Update Throughput

Higher update throughput is achieved by not doing a Get operation for every record update. Get+Put operations are replaced with Puts, which are really fast in HBase and can be further optimized by using a client-side buffer. Processing of updates (compaction) is still needed to perform the actual data merge, but it is done much more efficiently than doing Get+Put for each update operation (see more details below).

Real-time Updates Visibility

Users always see the latest data changes. Even if updates have not yet been processed and are still stored as a series of records, they will be merged on the fly.

By doing periodic merges of appended records we ensure that the data that has to be processed on the fly is small enough for the merge to be fast.

Efficient Updates Processing

- N Get+Put operations at write time are replaced with N Puts + 1 Scan (shared) + 1 Put operations

- Processing N changes at once is usually more effective than applying N individual changes

Let’s say we got 360 update requests for the same record: e.g. the record keeps track of some sensor value for a 1-hour interval and we collect data points every 10 seconds. These measurements need to be merged into a single record that represents the whole 1-hour interval. With the standard approach we would perform 360 Get+Put operations (while we can use a client-side buffer to perform partial aggregation and reduce the number of actual Get+Put operations, we want data to be sent immediately as it arrives instead of asking the user to wait 10*N seconds). With the append-only approach we perform 360 Put operations, 1 scan (which actually processes more than just these updates) that goes through the 360 records (stored in sequence) and calculates the resulting record, and 1 Put operation to store the result back. Fewer operations means fewer resources used, which leads to more efficient processing. Moreover, if the value that needs to be updated is a complex one (takes time to load into memory, etc.), it is much more efficient to apply all updates at once than one by one.

Deferring processing of updates is especially effective when a large portion of operations is in essence insertion of new data rather than an update of stored data. In this case a lot of Get operations (checking if there’s something to update) are redundant.
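The operation counts for the 360-update example can be checked with a small simulation. It is a sketch under assumptions: a dict and a list stand in for the table, the record holds a `(sum, count)` pair, and the function names and sample values are made up for illustration.

```python
from collections import Counter


def run_get_put(points):
    """Standard approach: read, modify, and write back for every data point."""
    ops, store = Counter(), {}
    for p in points:
        current = store.get("sensor-1")             # Get
        ops["get"] += 1
        total, count = current or (0.0, 0)
        store["sensor-1"] = (total + p, count + 1)  # Put
        ops["put"] += 1
    return store["sensor-1"], ops


def run_append_only(points):
    """Append-only approach: one write per point, one scan, one final write."""
    ops, appended = Counter(), []
    for p in points:
        appended.append(p)                          # Put of a new row, no Get
        ops["put"] += 1
    ops["scan"] += 1                                # one scan over all appended rows
    result = (sum(appended), len(appended))
    ops["put"] += 1                                 # Put of the merged record
    return result, ops


# 360 hypothetical sensor readings: one per 10 seconds for 1 hour.
points = [20.0 + (i % 5) for i in range(360)]
result_a, ops_a = run_get_put(points)
result_b, ops_b = run_append_only(points)
```

Both runs produce the same merged record; the first needs 360 Gets and 360 Puts, the second 361 Puts and a single scan.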

Better Handling of Update Load Peaks

Deferring processing of updates helps handle load peaks without major performance degradation. The actual (periodic) processing of updates can be scheduled for off-peak time (e.g. nights or weekends).

Ability to Rollback Updates

Since updates don’t change the existing data (until they are processed) rolling back is easy.

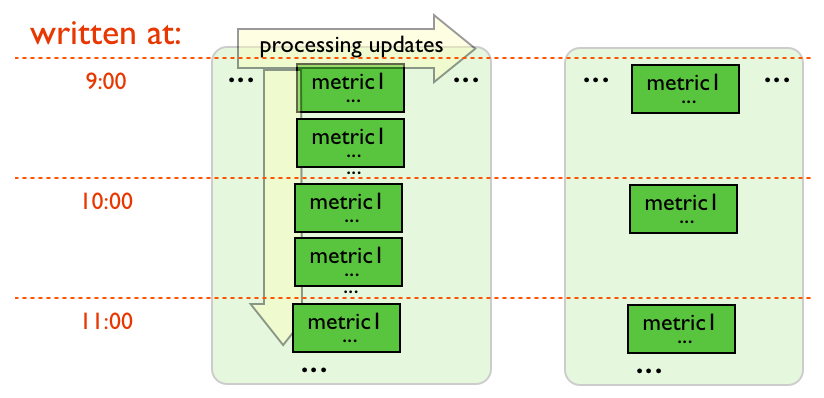

Preserving the ability to roll back even after updates were compacted is also not hard. Updates can be grouped and compacted within time periods of a given length as shown in the picture below. That means the client that reads data will still have to merge updates on the fly, even right after compaction is finished. However, with proper configuration this isn’t going to be a problem, as the number of records to be merged on the fly will be small enough.
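Compacting within fixed time periods while keeping the ability to roll back can be sketched as follows. The sketch assumes updates are `(timestamp, value)` pairs merged by summing; the function names are hypothetical and are not taken from any library.

```python
def compact_by_interval(updates, interval):
    """Merge (timestamp, value) updates into one record per time interval."""
    buckets = {}
    for ts, value in updates:
        start = ts - ts % interval  # start of the interval this update falls into
        buckets[start] = buckets.get(start, 0) + value
    return sorted(buckets.items())


def rollback(records, since):
    """Drop every record whose interval starts at or after `since`."""
    return [(ts, v) for ts, v in records if ts < since]


def read_total(records):
    """The reader still merges the per-interval records on the fly."""
    return sum(v for _, v in records)


# One update every 10 minutes for 3 hours, compacted into 1-hour records.
updates = [(t, 1) for t in range(0, 3 * 3600, 600)]
compacted = compact_by_interval(updates, 3600)
rolled_back = rollback(compacted, since=2 * 3600)  # undo the third hour
total = read_total(rolled_back)
```

Because each interval keeps its own record, rolling back the last hour is a matter of dropping one record rather than recomputing the merged value.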

Consider the following example where the goal is to keep an all-time average value for a particular sensor. Let’s say data is collected every 10 seconds for 30 days, which gives 259,200 separately written data points. While merging this many values on the fly might be quite fast for a medium-large HBase cluster, performing periodic compaction will improve reading speed a lot. Let’s say we perform updates processing every 4 hours and use a 1-hour interval as the compaction base (as shown in the picture above). This gives us, at any point in time, fewer than 24*30 + 4*60*6 = 2,160 non-compacted records that need to be processed on the fly when fetching the resulting record for 30 days. This is a small number of records and can be processed very fast. At the same time it is possible to roll back to any point in time with 1-hour granularity.

If the system should store more historical data but we don’t care about rolling it back (if nothing wrong was found during 30 days, the data is likely to be OK), then compaction can be configured to process all data older than 30 days as one group (i.e. merge it into one record).
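The numbers in this example can be reproduced with a few lines of arithmetic. The variable names are chosen for illustration only.

```python
SECONDS_BETWEEN_POINTS = 10
DAYS_KEPT = 30
COMPACTION_PERIOD_HOURS = 4  # how often updates processing runs

points_per_hour = 3600 // SECONDS_BETWEEN_POINTS  # 6 per minute * 60 minutes
total_points = points_per_hour * 24 * DAYS_KEPT   # raw data points in 30 days

# Upper bound on rows to merge when reading 30 days of data:
# one compacted row per hour, plus raw points written since the last compaction.
compacted_rows = 24 * DAYS_KEPT
pending_rows = COMPACTION_PERIOD_HOURS * points_per_hour
rows_to_merge = compacted_rows + pending_rows
```

With these values, `total_points` is 259,200 and `rows_to_merge` is 720 + 1,440 = 2,160.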

Automatic Handling of Task Failures which Write Data to HBase

Typical scenario: a task updating HBase data fails in the middle of writing, so some data was written and some was not. Ideally we should be able to simply restart the same task (on the same input data) so that the new attempt performs the needed updates without corrupting data through duplicated write operations.

In the suggested solution every update is written as a new record. Performing the same (literally the same, not just similar) update operation multiple times must not result in multiple separate updates, because that would corrupt the data. To ensure this, every update operation should write to a record with the same row key from any task attempt. A retried write then overwrites the single record created by the failed task instead of applying the same update twice, so the data is not corrupted.

This is especially convenient when we write to an HBase table from MapReduce tasks, as the MapReduce framework restarts failed tasks for you. With the given approach, handling of task failures happens automatically: no extra effort is needed to manually roll back the previous task’s changes and start a new task.
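A minimal sketch of this idea: if the row key of each update is derived only from the input (here the input name and line number, an assumed layout) and never from the task attempt, a retried task overwrites the rows left behind by the failed attempt. A dict stands in for the table, and all names are hypothetical.

```python
def update_row_key(record_id, input_name, line_no):
    """Row key built only from the input, so every attempt produces the same key."""
    return f"{record_id}#{input_name}#{line_no:08d}"


def run_task(table, input_name, lines, fail_after=None):
    """Write one append-only update per input line; optionally fail part-way."""
    for line_no, (record_id, value) in enumerate(lines):
        if fail_after is not None and line_no == fail_after:
            raise RuntimeError("simulated task failure")
        table[update_row_key(record_id, input_name, line_no)] = value


table = {}
lines = [("sensor-1", 5), ("sensor-1", 7), ("sensor-1", 9)]

try:
    run_task(table, "part-00000", lines, fail_after=2)  # first attempt writes 2 rows, then fails
except RuntimeError:
    run_task(table, "part-00000", lines)                # retry on the same input

total = sum(table.values())
```

After the retry the table holds exactly three update rows, so the merged total counts every input line once.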

Cons

Below are the major drawbacks of the suggested solution. Usually there are ways to reduce their effect on your system depending on the specific case (e.g. by tuning HBase appropriately or by adjusting parameters involved in data compaction logic).

- merging on the fly takes time. Properly configuring periodic updates processing is a key to keeping data fetching fast.

- when performing compaction, many records that don’t need to be compacted (already compacted or “alone-standing” records) may be scanned. Compaction can usually be performed only on data written after the previous compaction, which allows the use of efficient time-based filters to reduce the impact here.
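The time-based filtering mentioned in the last point can be sketched as follows, assuming each row carries its write timestamp. The tuple layout and function name are hypothetical; in HBase itself a time range set on the scan (for example via `Scan.setTimeRange`) could play this role.

```python
def rows_written_after(rows, last_compaction_ts):
    """Select only rows written after the previous compaction run."""
    return [row for row in rows if row[1] > last_compaction_ts]


# (row_key, written_at, value); the first two rows predate the last compaction.
rows = [
    ("sensor-1#0001", 100, 21.5),
    ("sensor-2#0001", 200, 19.0),
    ("sensor-1#0002", 300, 22.0),
    ("sensor-1#0003", 400, 22.5),
]
candidates = rows_written_after(rows, last_compaction_ts=250)
```

Only the rows written since the previous run are handed to the compaction logic, so already compacted data is not re-read.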

Solving these issues may be implementation-specific. We’ll bring them up again when talking about our implementation in the follow-up post.

Implementation: Meet HBaseHUT

The suggested solution was implemented and open-sourced as the HBaseHUT project. HBaseHUT will be covered in the follow-up post shortly.

If you like this sort of stuff, we’re looking for Data Engineers!

HBaseCon 2012 is the first-ever HBase-focused conference happening this May in San Francisco. I’m happy to say that
